# Ta-Datax-Writer Plugin User Guide

# I. Introduction

Ta-Datax-Writer is a writer plugin for DataX that transfers data into TA clusters within the DataX ecosystem. Deploy DataX on your data transmission server, then combine any reader plugin supported by DataX with this writer to synchronize data from multiple data sources into TA clusters.

To learn more about DataX, visit the DataX GitHub homepage: https://github.com/alibaba/DataX

**Data is transmitted by sending it to the TA data receiving endpoint.**

# II. Functions and Limitations

TaDataWriter converts data from the DataX protocol into TA cluster internal data. Its capabilities and limitations are as follows:

- Supports writing to TA clusters only.

- Supports data compression; the currently supported formats are gzip, lzo, lz4, and snappy.

- Supports multi-threaded transport.

# III. Instructions for Use

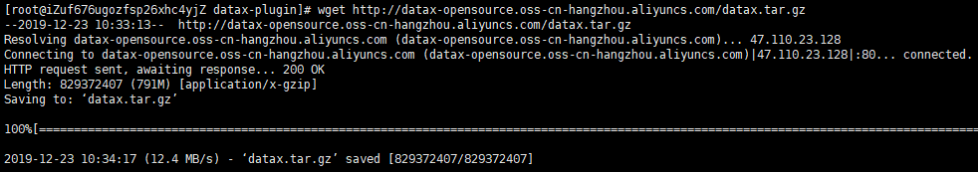

# 3.1 Download DataX

- Visit the DataX official website.

- Download the DataX toolkit from the DataX download address:

```shell
wget http://datax-opensource.oss-cn-hangzhou.aliyuncs.com/datax.tar.gz
```

# 3.2 Decompress DataX

```shell
tar -zxvf datax.tar.gz
```

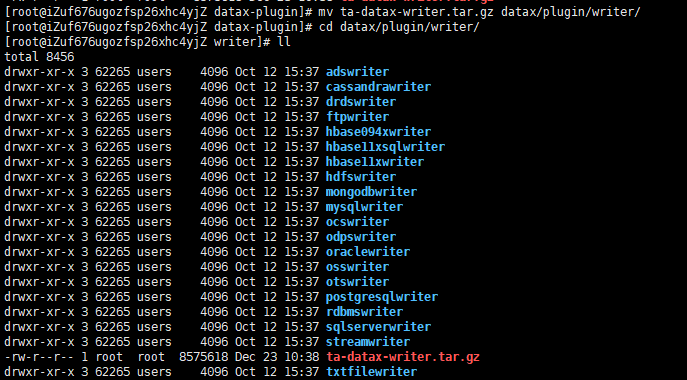

# 3.3 Install the Ta-Datax-Writer Plugin

- Download the ta-datax-writer plugin from the ta-datax-writer download address:

```shell
wget https://download.thinkingdata.cn/tools/release/ta-datax-writer.tar.gz
```

- Copy ta-datax-writer.tar.gz to the `datax/plugin/writer` directory:

```shell
cp ta-datax-writer.tar.gz datax/plugin/writer
```

- Unpack the plugin package inside that directory:

```shell
cd datax/plugin/writer
tar -zxvf ta-datax-writer.tar.gz
```

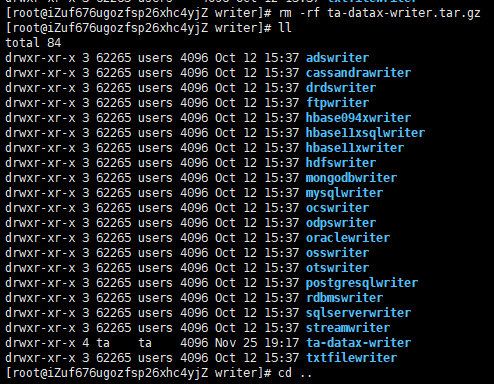

- Delete the package:

```shell
rm -f ta-datax-writer.tar.gz
```
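To confirm the installation, the following Python sketch checks that the plugin was unpacked where DataX looks for writer plugins and that the archive was removed. It assumes, as is usual for DataX plugins, that the archive unpacks into `plugin/writer/ta-datax-writer/` and that this directory contains a `plugin.json` descriptor; both names are assumptions rather than facts taken from this guide, and the helper functions are hypothetical.

```python
import sys
from pathlib import Path

PLUGIN_NAME = "ta-datax-writer"


def plugin_installed(datax_home: str, plugin_name: str = PLUGIN_NAME) -> bool:
    """Return True if the writer plugin appears to be unpacked under DataX.

    Assumption: the archive unpacks into plugin/writer/<plugin_name>/ and,
    like other DataX plugins, contains a plugin.json descriptor.
    """
    plugin_dir = Path(datax_home) / "plugin" / "writer" / plugin_name
    return (plugin_dir / "plugin.json").is_file()


def archive_left_over(datax_home: str, plugin_name: str = PLUGIN_NAME) -> bool:
    """Return True if the downloaded .tar.gz is still in plugin/writer."""
    return (Path(datax_home) / "plugin" / "writer" / f"{plugin_name}.tar.gz").exists()


if __name__ == "__main__":
    # Usage: python check_plugin.py <DATAX_HOME>   (defaults to ./datax)
    home = sys.argv[1] if len(sys.argv) > 1 else "datax"
    print("plugin installed:", plugin_installed(home))
    print("archive left over:", archive_left_over(home))
```

Run it from the directory that contains `datax`, for example `python check_plugin.py datax`.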

# IV. Functional Description

# 4.1 Sample Configuration

```json
{
  "job": {
    "setting": {
      "speed": {
        "channel": 1
      }
    },
    "content": [
      {
        "reader": {
          "name": "streamreader",
          "parameter": {
            "column": [
              { "value": "123123", "type": "string" },
              { "value": "testbuy", "type": "string" },
              { "value": "2019-08-16 08:08:08", "type": "date" },
              { "value": "2222", "type": "string" },
              { "value": "2019-08-16 08:08:08", "type": "date" },
              { "value": "test", "type": "bytes" },
              { "value": true, "type": "bool" }
            ],
            "sliceRecordCount": 10
          }
        },
        "writer": {
          "name": "ta-datax-writer",
          "parameter": {
            "thread": 3,
            "type": "track",
            "pushUrl": "http://{Data Receiving Address}",
            "appid": "6f9e64da5bc74792b9e9c1db4e3e3822",
            "column": [
              { "index": "0", "colTargetName": "#distinct_id" },
              { "index": "1", "colTargetName": "#event_name" },
              {
                "index": "2",
                "colTargetName": "#time",
                "type": "date",
                "dateFormat": "yyyy-MM-dd HH:mm:ss.SSS"
              },
              { "index": "3", "colTargetName": "#account_id", "type": "string" },
              {
                "index": "4",
                "colTargetName": "testDate",
                "type": "date",
                "dateFormat": "yyyy-MM-dd HH:mm:ss.SSS"
              },
              { "index": "5", "colTargetName": "os_1", "type": "string" },
              { "index": "6", "colTargetName": "testBoolean", "type": "boolean" },
              { "colTargetName": "add_clo", "value": "123123", "type": "string" }
            ]
          }
        }
      }
    ]
  }
}
```
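DataX jobs are launched with `python {DATAX_HOME}/bin/datax.py {job}.json`. The sketch below assumes the sample configuration has been saved to a file; it parses the job first, so a JSON syntax error is reported before DataX starts, prints the writer's `pushUrl` and `appid`, and then launches DataX. The helper names and the file names in the usage comment are hypothetical. Some DataX releases ship a Python 2 `datax.py`, so the interpreter is a parameter rather than hard-coded.

```python
import json
import subprocess
import sys
from pathlib import Path


def load_job(job_file: str) -> dict:
    """Parse the job file so JSON syntax errors surface before DataX starts."""
    return json.loads(Path(job_file).read_text(encoding="utf-8"))


def writer_parameter(job: dict) -> dict:
    """Return the parameter block of the first ta-datax-writer in the job."""
    for content in job["job"]["content"]:
        writer = content.get("writer", {})
        if writer.get("name") == "ta-datax-writer":
            return writer["parameter"]
    raise ValueError("job contains no ta-datax-writer")


def datax_command(datax_home: str, job_file: str, python: str = sys.executable) -> list:
    """Build the DataX launch command: <python> <DATAX_HOME>/bin/datax.py <job file>."""
    return [python, str(Path(datax_home) / "bin" / "datax.py"), job_file]


if __name__ == "__main__":
    # Usage (hypothetical paths): python run_ta_job.py datax ta_job.json
    datax_home, job_file = sys.argv[1], sys.argv[2]
    params = writer_parameter(load_job(job_file))
    print("sending to", params["pushUrl"], "for appid", params["appid"])
    subprocess.run(datax_command(datax_home, job_file), check=True)
```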

# 4.2 Parameter Description

- thread

  - Description: Number of threads used concurrently within each channel. The value applies to every channel, independent of how many channels DataX runs.

  - Required: No

  - Default: 3

- pushUrl

  - Description: Address of the TA data receiving endpoint (access point).

  - Required: Yes

  - Default: None

- uuid

  - Description: When enabled, adds `"#uuid": "<uuid value>"` to the transmitted data, for use with the data unique ID feature.

  - Required: No

  - Default: false

- type

  - Description: Type of data to write: `track` or `user_set`.

  - Required: Yes

  - Default: None

- compress

  - Description: Text compression type. If omitted, no compression is applied. Supported compression types are zip, lzo, lzop, tgz, and bzip2.

  - Required: No

  - Default: No compression

- appid

  - Description: The APP ID of the target TA project.

  - Required: Yes

  - Default: None

- column

  - Description: List of fields to write. `type` specifies the data type; `index` specifies the reader column the field is taken from (starting at 0); `value` makes the field a constant, so TaDataWriter does not read it from the reader but generates the column from the given value.

  - You can specify the column information as follows:

    ```json
    [
      {
        "type": "Number",
        "colTargetName": "test_col",
        "index": 0
      },
      {
        "type": "string",
        "value": "testvalue",
        "colTargetName": "test_value_col"
      },
      {
        "index": 0,
        "type": "date",
        "colTargetName": "testdate",
        "dateFormat": "yyyy-MM-dd HH:mm:ss.SSS"
      }
    ]
    ```

    The first entry takes the first column passed from the reader through DataX (`index` 0) and writes it as a Number field named `test_col`. The second entry does not read from the reader; TaDataWriter generates a string field with the constant value `testvalue`. The third entry reads the same reader column as a date, formatted according to `dateFormat`.

  - Each column entry must specify either `index` or `value`. `type` is optional; for date fields you can optionally set `dateFormat`.

  - Required: Yes

  - Default: all columns are read using the reader's types
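The rules in the parameter list above can be collected into a small validation sketch. It checks only what the descriptions state: `pushUrl`, `appid`, `type`, and `column` are required, `type` is `track` or `user_set`, and every column entry sets `index` or `value`. Requiring `colTargetName` is an assumption based on the examples, and `compress` is not checked. Because `thread` applies per channel, total sending threads are estimated as `channel * thread`. The function names are hypothetical.

```python
from typing import List

VALID_TYPES = {"track", "user_set"}  # values listed in the type description
DEFAULT_THREAD = 3  # documented default for thread


def check_writer_parameter(param: dict) -> List[str]:
    """Return a list of problems found in a ta-datax-writer parameter block."""
    problems = []
    for key in ("pushUrl", "appid", "type", "column"):
        if key not in param:
            problems.append(f"missing required parameter: {key}")
    if "type" in param and param["type"] not in VALID_TYPES:
        problems.append(f"unsupported type: {param['type']!r}")
    for i, col in enumerate(param.get("column", [])):
        if "index" not in col and "value" not in col:
            problems.append(f"column {i}: needs either index or value")
        # Assumption: every entry needs a target name, as in all examples.
        if "colTargetName" not in col:
            problems.append(f"column {i}: missing colTargetName")
    return problems


def total_threads(channel: int, param: dict) -> int:
    """thread applies per channel, so total sending threads = channel * thread."""
    return channel * int(param.get("thread", DEFAULT_THREAD))
```

With the writer parameters from the sample configuration and `"channel": 1`, the check reports no problems and the estimate is 3 sending threads.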

# 4.3 Type Conversion

TaDataWriter converts DataX internal types as follows:

| DataX internal type | TaDataWriter data type |
|---|---|
| Int | Number |
| Long | Number |
| Double | Number |
| String | String |
| Boolean | Boolean |
| Date | Date |
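To illustrate how the type table and the `column` parameter fit together, the following sketch maps one reader record to an output row: `index` picks a 0-based reader column, `value` supplies a constant, date values are rendered with the `yyyy-MM-dd HH:mm:ss.SSS` pattern, and `#uuid` is optionally added as described for the `uuid` parameter. It is not the plugin's implementation. It returns a flat dict rather than TA's actual wire format, assumes a random UUID4 for `#uuid`, prefers `value` when an entry sets both keys, and handles only that one date pattern. All names are hypothetical.

```python
import uuid
from datetime import datetime
from typing import Any, Dict, List

# DataX internal type -> TaDataWriter data type, as listed in the table above.
TYPE_MAP = {
    "Int": "Number",
    "Long": "Number",
    "Double": "Number",
    "String": "String",
    "Boolean": "Boolean",
    "Date": "Date",
}


def ta_type(datax_type: str) -> str:
    """Look up the TaDataWriter type for a DataX internal type."""
    return TYPE_MAP[datax_type]


def format_date(value: datetime) -> str:
    """Render a datetime like the Java pattern yyyy-MM-dd HH:mm:ss.SSS.

    Only this one pattern is handled in this sketch.
    """
    millis = value.microsecond // 1000
    return value.strftime("%Y-%m-%d %H:%M:%S.") + f"{millis:03d}"


def map_record(record: List[Any], columns: List[Dict[str, Any]],
               add_uuid: bool = False) -> Dict[str, Any]:
    """Build one flat output row from reader values using the column list."""
    row: Dict[str, Any] = {}
    for col in columns:
        if "value" in col:
            raw = col["value"]  # constant generated by the writer, not read from the reader
        else:
            raw = record[int(col["index"])]  # 0-based reader column; "0" and 0 both work
        if col.get("type") == "date" and isinstance(raw, datetime):
            raw = format_date(raw)
        row[col["colTargetName"]] = raw
    if add_uuid:
        row["#uuid"] = str(uuid.uuid4())  # assumption: one random UUID per record
    return row
```

Using the first three writer columns of the sample configuration plus the constant `add_clo`, the reader record `["123123", "testbuy", datetime(2019, 8, 16, 8, 8, 8)]` maps to `#distinct_id` = `"123123"`, `#event_name` = `"testbuy"`, `#time` = `"2019-08-16 08:08:08.000"`, and `add_clo` = `"123123"`.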